Although research and experiments in Earth observation from space had been conducted earlier, the first satellites dedicated to remote sensing appeared in orbit around the Earth in the early 1960s. Initially, the missions were short, photographs were taken on analogue film, and the film containers had to be recovered after a complicated de-orbiting process. Nowadays, satellite missions may last a dozen years or more, images are acquired by various optoelectronic or radar sensors, and users can access them almost in real time. Impressive technological progress has been made, and its fruits, satellite images, can be used almost every day in many fields. It is worth taking a closer look at this technology so that we understand it better and, as a result, benefit from it more.

Basic parameters of geo-imaging satellite systems

Satellite images can be described using several parameters. These include:

- spatial resolution,

- temporal resolution,

- spectral resolution,

- the width of the imaging strip,

- radiometric resolution.

In this blog post, we will mainly look at spatial and temporal resolution and at how they determine which applications a satellite Earth observation imaging system can be used for. I will describe the other parameters in more detail in future materials.

Spatial resolution

Spatial resolution is most often expressed as GSD (Ground Sample Distance), i.e. the distance on the Earth's surface between the centres of the areas imaged by two neighbouring pixels of the sensor [1]. It is given in metres per pixel (m/pxl). Improving the resolution of an image twofold, for example from 1 m/pxl to 0.5 m/pxl, makes the image carry four times more information, because one pixel of a 1 m/pxl image covers the same ground area as four pixels of a 0.5 m/pxl image. In technical specifications, the value is most often given for the point directly below the satellite, i.e. at nadir. In practice, however, the actual value varies with the inclination of the Earth's surface relative to the satellite at the imaged point, the current altitude of the satellite above that point, and the angle at which the image is taken.

Spatial resolution, sometimes also called field resolution, tells you how accurately and in how much detail the image represents the scene, and consequently how much you can learn from it. There are three levels of image interpretation [2]: detection, recognition and identification, and each of them requires a different resolution. For example, to detect a vehicle, a spatial resolution of 1.5 m/pxl is enough, while to recognize the kind of vehicle (to determine, for example, whether it is a truck or a tank), an image with a resolution of 0.5 m/pxl is needed. To correctly identify (name) the type of vehicle, the image should have a GSD of 0.3–0.15 m/pxl. For large objects such as bridges, the individual levels can be reached at coarser resolutions: detection at 6 m/pxl, recognition at 4.5 m/pxl and identification at 1.5 m/pxl [3].
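The relationships described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not an established tool: the names `THRESHOLDS`, `pixels_per_area` and `interpretation_level` are hypothetical, the threshold values are the ones quoted in this section, and for vehicle identification the coarser end of the 0.3–0.15 m/pxl range is assumed.

```python
# Minimal sketch: GSD, pixel counts and interpretation levels.
# Threshold values are those quoted in the text; all names are hypothetical.

# Coarsest GSD (m/pxl) at which each level can still be reached.
# For vehicle identification the coarser end of 0.3-0.15 m/pxl is assumed.
THRESHOLDS = {
    "vehicle": {"detection": 1.5, "recognition": 0.5, "identification": 0.3},
    "bridge": {"detection": 6.0, "recognition": 4.5, "identification": 1.5},
}

# Levels ordered from the most to the least demanding.
LEVELS = ("identification", "recognition", "detection")


def pixels_per_area(area_m2: float, gsd_m: float) -> float:
    """Number of pixels covering a ground area (m^2) at a given GSD (m/pxl)."""
    return area_m2 / gsd_m ** 2


def interpretation_level(obj: str, gsd_m: float) -> str:
    """Most demanding interpretation level reachable for `obj` at `gsd_m`."""
    for level in LEVELS:
        if gsd_m <= THRESHOLDS[obj][level]:
            return level
    return "none"


if __name__ == "__main__":
    # Halving the GSD (1 m -> 0.5 m) quadruples the pixels per square metre.
    print(pixels_per_area(1.0, 0.5) / pixels_per_area(1.0, 1.0))  # 4.0
    for gsd in (1.5, 1.0, 0.5, 0.3):
        print(gsd, interpretation_level("vehicle", gsd), interpretation_level("bridge", gsd))
```

Fixed thresholds like these are only a rule of thumb: in practice, contrast, lighting and the analyst's experience also affect what can be interpreted.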

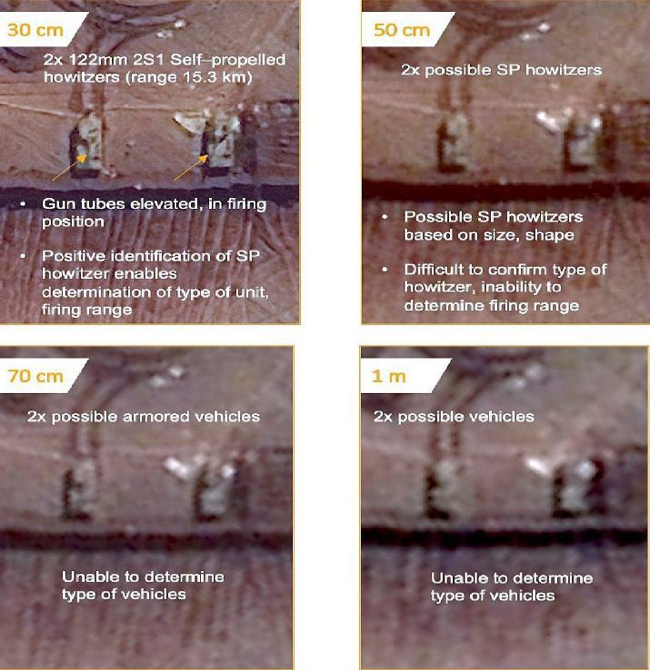

A practical example is provided in the figure below:

Comparison of image quality at different field resolutions and the ability to interpret them. Source: Maxar. Found on: EoPortal Directory, WorldView Legion Constellation [4]

Two vehicles can be detected on a satellite image with a resolution of 1 m/pxl, but it is very difficult to determine their type. Improving the resolution slightly, to 0.7 m/pxl, makes it possible to conclude with some probability that the detected vehicles are armoured. At 0.5 m/pxl we can say that they are self-propelled howitzers, while images with a resolution of 30 cm/pxl allow us to identify the type of vehicle: the 2S1 (known in Poland as Goździk). Thanks to the image, we can learn that the depicted vehicles fire 122 mm calibre shells with a maximum range of 15 km, and that their barrels are raised and ready to fire.

Temporal resolution

Temporal resolution (revisit time) is the time that passes between consecutive opportunities to image a selected location on Earth. In other words, it tells us how often the satellite (or the entire satellite system) will have an opportunity to take an image of the area of interest. It depends mainly on the number of the system's satellites in orbit, the type and parameters of the individual satellites' orbits, the latitude of the area of interest and the manoeuvrability of the satellites in orbit. To a lesser extent, it also depends on the width of the satellites' imaging strip.

The following describes the above parameters in more detail:

- The number of the system's satellites operating in orbit. Naturally, the more satellites in the constellation, the more often the scene can be imaged again.

- The type and parameters of the individual satellites' orbits. Optoelectronic satellites are usually placed in Sun-synchronous orbits (SSO). These are near-polar orbits with inclinations in the range 97°–99.5°, whose parameters are chosen so that the satellite passes over a given latitude at the same local time every day. Alternatively, satellites of this type are placed in mid-inclination orbits. Such a trajectory means that they can only image areas between the latitudes bounded by the orbit's inclination, but they revisit these areas significantly more often, although the individual visits occur at different times of day.

- The latitude of the area of interest. The higher the latitude (north or south) of the selected area, the more often satellites will have an opportunity to image it, because the ground tracks of near-polar orbits converge towards the poles.

- The possibility of manoeuvring satellites in orbit. Thanks to advanced navigation and flight control systems, satellites can change their orientation in orbit. By tilting away from the nadir axis by up to several dozen degrees, a satellite can image an area of interest located even a few hundred kilometres from its ground track, with a positioning accuracy of several metres. However, images taken far from nadir suffer from a significant degradation of spatial resolution. For example, a satellite in Sun-synchronous orbit that images only at nadir with a resolution of 0.5 m/pxl will pass over a selected point on the equator only about every 15 days, and many points on the equator will never come within its field of view. If it takes images with up to the maximum 45° deviation from the nadir axis, the revisit time improves to approximately 2.5 days and all points on the equator are within range, but images taken at the most extreme angles reach a detail of only about 1.5 m/pxl GSD [5]. An area in Poland, using maximum manoeuvrability, can be imaged by a single satellite in Sun-synchronous orbit every 1.5 days on average.
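The trade-off between reach and detail when tilting off nadir can be sketched with simple geometry. This is a rough model under loud assumptions, not a vendor's method: a spherical Earth of radius 6371 km, flat terrain, a hypothetical 600 km orbit, a satellite that rolls sideways (across its ground track), and an idealised camera whose pixel angular size stays fixed. Atmosphere, motion and sensor effects are ignored, and the function name `off_nadir_geometry` is hypothetical.

```python
import math

R_EARTH_KM = 6371.0  # mean Earth radius; terrain and oblateness are ignored


def off_nadir_geometry(altitude_km: float, off_nadir_deg: float, gsd_nadir_m: float):
    """Rough viewing geometry of a sideways (cross-track) tilt over a spherical Earth.

    Returns (ground_offset_km, slant_range_km, incidence_deg,
             gsd_along_m, gsd_cross_m).
    """
    r, h = R_EARTH_KM, altitude_km
    theta = math.radians(off_nadir_deg)
    # Law of sines in the triangle Earth centre - satellite - target:
    # the local incidence angle at the target exceeds the satellite's tilt.
    sin_inc = (r + h) / r * math.sin(theta)
    if sin_inc >= 1.0:
        raise ValueError("line of sight does not intersect the Earth")
    incidence = math.asin(sin_inc)
    # Earth central angle between the nadir point and the target,
    # converted to a distance along the surface.
    ground_offset = r * (incidence - theta)
    # Satellite-to-target distance along the line of sight (near intersection).
    slant = (r + h) * math.cos(theta) - math.sqrt(r**2 - ((r + h) * math.sin(theta)) ** 2)
    # A pixel's angular size is fixed by the optics, so its footprint grows with
    # the slant range; across track it is stretched further by the oblique view.
    scale = slant / h
    gsd_along = gsd_nadir_m * scale
    gsd_cross = gsd_nadir_m * scale / math.cos(incidence)
    return ground_offset, slant, math.degrees(incidence), gsd_along, gsd_cross


if __name__ == "__main__":
    # Hypothetical 600 km orbit and 0.5 m/pxl at nadir.
    for angle in (0, 15, 30, 45):
        offset, slant, inc, along, cross = off_nadir_geometry(600.0, angle, 0.5)
        print(f"{angle:>2} deg: offset {offset:6.1f} km, incidence {inc:4.1f} deg, "
              f"GSD {along:.2f} x {cross:.2f} m")
```

For a 45° tilt, this simplified model puts the target roughly 630 km from the ground track and degrades the cross-track GSD from 0.5 m to roughly 1.2 m. That is the same order of magnitude as the figure of about 1.5 m/pxl quoted above, but the sketch should not be read as reproducing that figure exactly.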

To sum up, reducing temporal resolution to a single number is a significant simplification, so it is worth checking what assumptions were made when it was determined. Typically, the revisit time is given for a point on the equator and assumes the satellite's maximum ability to take images at an angle from the nadir axis, which comes at the expense of the spatial resolution actually achieved.
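To see how a quoted equatorial revisit figure might translate to other situations, the sketch below applies two idealised rules of thumb rather than a real mission-planning method. The assumptions are that, for near-polar orbits, the spacing between neighbouring ground tracks shrinks roughly with the cosine of latitude, and that N identical, evenly phased satellites divide the mean revisit time by N. The function names are hypothetical.

```python
import math


def revisit_at_latitude(revisit_equator_days: float, latitude_deg: float) -> float:
    """Rough mean revisit at a given latitude for a near-polar orbit.

    Neighbouring ground tracks get closer roughly as cos(latitude), so a fixed
    off-nadir reach covers a given point more often. This first-order rule
    breaks down near the poles and beyond an orbit's inclination limit.
    """
    return revisit_equator_days * math.cos(math.radians(latitude_deg))


def constellation_revisit(single_satellite_days: float, n_satellites: int) -> float:
    """Idealised mean revisit for n identical, evenly phased satellites."""
    if n_satellites < 1:
        raise ValueError("a constellation needs at least one satellite")
    return single_satellite_days / n_satellites


if __name__ == "__main__":
    # Equatorial revisit of about 2.5 days (maximum tilt), moved to 52 deg N.
    print(round(revisit_at_latitude(2.5, 52.0), 2))  # 1.54
    # The same satellite doubled into an evenly phased pair.
    print(constellation_revisit(2.5, 2))  # 1.25
```

Starting from the equatorial figure of about 2.5 days and a latitude of 52° N (roughly central Poland), the first rule gives about 1.54 days, close to the 1.5-day average quoted above for Poland.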

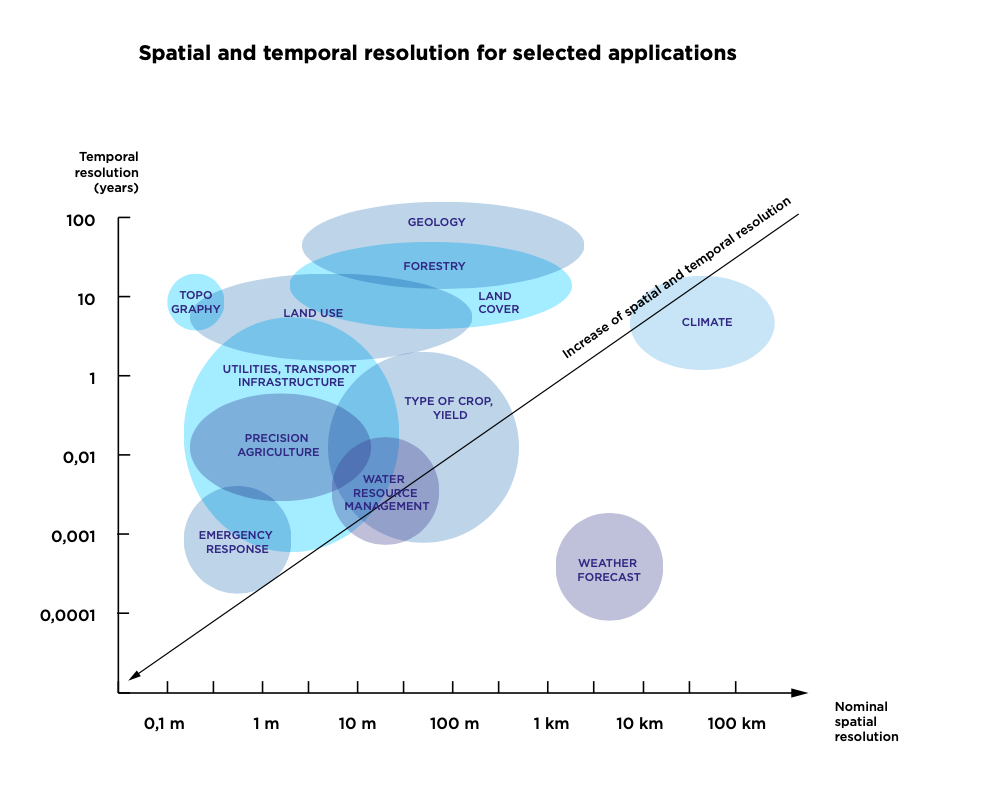

The possibility of using the Earth observation satellite system for different applications, depending on the spatial and temporal resolution parameters obtained.

Earth observation satellite systems are deployed in many fields such as climate research, weather forecasting, geology, forestry, land use/cover studies, topography, monitoring of utilities, transport infrastructure, precision agriculture, crop identification, water resource management and emergency response (among others). The chart below shows the parameters of satellite imagery used for various applications.

Source: P. S. Thenkabail, Remote Sensing Handbook – Three Volume Set, Vol. I, p. 68.

For weather forecasting, it is particularly important that the data is up to date, while spatial resolution is less significant. Images for weather forecasts are therefore frequently provided by satellites in geostationary orbits, which remain in a fixed position relative to a point on the Earth. However, because of their distance from the Earth (more than 35 thousand km), they can only provide images at low spatial resolution.
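The figure of more than 35 thousand km follows from Kepler's third law: a satellite that stays fixed over one point on the equator must complete one orbit per sidereal day. The sketch below computes that altitude from standard constants. It then uses a simple pinhole-camera assumption, namely that nadir GSD grows in proportion to distance for identical optics, to show how much coarser the same hypothetical camera would be in geostationary orbit than in a hypothetical 600 km orbit. Real geostationary imagers use different optics, so this only illustrates the geometric scaling; the function names are hypothetical.

```python
import math

MU_EARTH_KM3_S2 = 398600.4418   # Earth's gravitational parameter (GM), km^3/s^2
SIDEREAL_DAY_S = 86164.0905     # one Earth rotation relative to the stars, s
R_EQUATOR_KM = 6378.137         # WGS 84 equatorial radius, km


def geostationary_altitude_km() -> float:
    """Altitude above the equator at which the orbital period equals one sidereal day."""
    # Kepler's third law for a circular orbit: a^3 = GM * T^2 / (4 * pi^2)
    a = (MU_EARTH_KM3_S2 * SIDEREAL_DAY_S**2 / (4 * math.pi**2)) ** (1 / 3)
    return a - R_EQUATOR_KM


def scaled_gsd(gsd_m: float, from_altitude_km: float, to_altitude_km: float) -> float:
    """Nadir GSD of the same (hypothetical) optics moved to another altitude.

    Pinhole-camera assumption: GSD is proportional to the distance to the ground.
    """
    return gsd_m * to_altitude_km / from_altitude_km


if __name__ == "__main__":
    alt = geostationary_altitude_km()
    print(f"geostationary altitude: {alt:.0f} km")
    # A hypothetical 0.5 m/pxl camera at 600 km, moved to geostationary orbit.
    print(f"same camera in GEO: {scaled_gsd(0.5, 600.0, alt):.1f} m/pxl")
```

The distance ratio alone (roughly 60 times) turns a sub-metre camera into one with a GSD of tens of metres, before any of the other design differences of real weather imagers are considered.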

Satellites with medium spatial resolution, usually around 10 m/pxl or coarser, are used to study crop types and yields and geology, and partly also for water resource management and forestry. An example is the Sentinel-2 satellites of the European Copernicus Programme. Single satellites are sufficient for these purposes, as these applications require only infrequent revisits: it is enough for the satellites to image an area once or several times a year.

High-resolution (HR) satellites, with a GSD in the 1–10 m/pxl range, are used for crop surveys, precision agriculture, land use and land cover studies, and monitoring of utilities and transport infrastructure. For some of these applications, it is worth building small satellite constellations (consisting, for example, of a pair of satellites) so that an area can be revisited a few times per month.

Very high resolution (VHR) satellites, with a GSD below 1 m/pxl, are well suited to collecting data on utilities and infrastructure, as well as to detailed research in forestry or precision agriculture. Their great advantage is that they can be used in emergency response and for defence purposes. These satellites often have narrow imaging strips, from a few to a dozen or so kilometres wide, so to shorten revisit times, systems of several or even more than a dozen satellites are placed in orbit.
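The three classes described above can be summarised as a simple lookup on nadir GSD. This is a minimal sketch using the ranges given in this section, with hypothetical names. The boundaries are fuzzy in practice: a GSD of exactly 10 m sits on the border between the ranges given for medium-resolution and HR satellites, and this sketch arbitrarily assigns it to HR. The `TYPICAL_SETUP` strings simply restate the text.

```python
# Typical system set-up for each class, as described in the text.
TYPICAL_SETUP = {
    "medium": "single satellite, imaging once or several times a year",
    "HR": "small constellation (e.g. a pair), revisits a few times per month",
    "VHR": "constellation of several to more than a dozen satellites",
}


def resolution_class(gsd_m: float) -> str:
    """Classify a sensor by its nadir GSD (m/pxl) using the ranges in the text.

    Exactly 1 m and exactly 10 m are both counted as HR (an arbitrary choice).
    """
    if gsd_m <= 0:
        raise ValueError("GSD must be positive")
    if gsd_m < 1.0:
        return "VHR"     # below 1 m/pxl
    if gsd_m <= 10.0:
        return "HR"      # 1-10 m/pxl
    return "medium"      # coarser than 10 m/pxl


if __name__ == "__main__":
    for gsd in (0.3, 0.5, 1.5, 5.0, 10.0, 20.0, 60.0):
        cls = resolution_class(gsd)
        print(f"{gsd:>5} m/pxl -> {cls}: {TYPICAL_SETUP[cls]}")
```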