Any commonly used IT system consists of multiple layers of software: from drivers, through the operating system, to user software. Its various components are created by different organisations. The logical corollary is that we must extend some credit of trust to each of those organisations. We need to believe not only that their intentions are pure, but also that their software is free of security vulnerabilities. Therefore, by using any software, we show a two-fold trust.

Imagine that you want to create a medium-sized data communication system. First, you will be selecting components and tools. How should you approach this task while maintaining a high level of security and common sense? Let’s look at this problem from the point of view of someone who wants to not only secure the system, but also reduce the degree of reliance on external components. We’ll start with operating system selection, then containerisation, and end with external packages.

Operating system

Those with trust issues should steer toward transparency. Therefore, the first step is to select an operating system with open source code. With that in mind, Unix-like systems, and Linux in particular, are the natural choice. The next question is which distribution to use. A quick comparison of job postings showed that the most commonly used Linux server distributions are Debian, Ubuntu, and CentOS. Therefore, we will focus on them in the rest of this article.

Distribution comparison

One of the key factors in the security of the listed Linux distributions is the update policy applied when new vulnerabilities are discovered. Typically, vulnerabilities in Debian and Ubuntu are mitigated at similar times. Ubuntu is derived from Debian, but its packages are updated independently.

The case is slightly different for CentOS, which uses packages from the Red Hat Enterprise Linux (RHEL) distribution. Its updates are therefore delayed relative to RHEL. According to the CentOS website, the goal is to make updates available within 72 hours of their RHEL release, and they usually arrive within 24 hours [1]. Another issue is that CentOS 8 will lose its support at the end of this year. However, we will discuss this issue later.

We will conduct a simple experiment and investigate the response time to the vulnerability labelled CVE-2021-3156. This is a heap overflow vulnerability in sudo that allows privilege escalation in the systems in question. According to CVSS v3, its severity is rated “High”. The vulnerability information was made public on January 26, 2021. The linked package pages show the fixed packages for Debian [2] and CentOS [3] dated January 20, and the one for Ubuntu [4] a day earlier, which suggests that the fixes were prepared before the public disclosure. As you can see, the response times were very similar. When considering similar cases, one can conclude that the time to fix vulnerabilities varies little between distributions. Of course, there are small deviations in selected cases, but they have no apparent effect on the security of the system as a whole.

In terms of package trust, it is also important that Debian uses “Reproducible Builds”. This means that (according to the Debian wiki) it should be possible to recreate each package byte-for-byte. In practice, this means that we have more confidence that no additional, potentially unwanted code was added at the source code compilation stage. Of course, it is not possible for every Debian user to try to reproduce every package that is installed. But it only takes one person to detect such an incident and alert others. This approach by the Debian developers further increases trust in this distribution. At the time of writing this article, Ubuntu and CentOS did not have similar mechanisms. Debian also stands out if we compare the teams responsible for the distributions in question. Debian is a project maintained by a community distributed around the world. Commercial companies are largely responsible for the development of the other two.
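The byte-for-byte comparison at the heart of a reproducibility check can be sketched in a few lines of Python. This is only an illustration, not Debian's actual tooling: the function name `first_difference` and the idea of comparing a distributed package file with a locally rebuilt one are assumptions made for the example.

```python
from pathlib import Path
from typing import Optional


def first_difference(distributed: Path, rebuilt: Path,
                     chunk_size: int = 65536) -> Optional[int]:
    """Return the offset of the first differing byte, or None if the
    two files are byte-for-byte identical (hypothetical helper)."""
    offset = 0
    with distributed.open("rb") as left, rebuilt.open("rb") as right:
        while True:
            chunk_l = left.read(chunk_size)
            chunk_r = right.read(chunk_size)
            if chunk_l != chunk_r:
                # Locate the exact byte inside the mismatching chunk.
                for i, (x, y) in enumerate(zip(chunk_l, chunk_r)):
                    if x != y:
                        return offset + i
                # One chunk is a prefix of the other: lengths differ.
                return offset + min(len(chunk_l), len(chunk_r))
            if not chunk_l:
                return None  # both files ended without a mismatch
            offset += len(chunk_l)
```

A result of `None` means the rebuilt artifact matches the distributed one; any integer points at the first byte worth investigating.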

An important issue regarding trust and privacy is the controversy that surrounded Canonical during the development of older versions of Ubuntu Desktop. Namely, Ubuntu 12.10 by default sent local searches to external servers in order to share them with Amazon. That way, Amazon could better tailor its ads. Granted, there was an option to disable this “functionality”, but it shouldn’t have been enabled by default in the first place. Actions of this kind have been heavily criticized [5]. As of Ubuntu 16.04 this mechanism has been disabled by default. Of course, the problem concerned Ubuntu Desktop, not Ubuntu Server, which is the more relevant one from the point of view of this article. However, this situation had an impact on the trust we put in Canonical, as the company responsible for both Ubuntu versions.

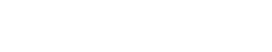

It’s also worth mentioning CentOS as the only one of these systems that has SELinux enabled by default. Of course, you can install this tool in other distributions as well, but its inclusion by default is a positive aspect for a system intended to be used as a production environment. On the other hand, it is a bit sad that the 5 most popular results (in Polish, using the most popular search engine) for the phrase “centos selinux” return information about how to disable SELinux:

Finally, let’s return to the issue raised a few paragraphs ago. The CentOS project will soon cease to be supported, and its successor is expected to be CentOS Stream. Until now, CentOS has been a downstream distribution relative to RHEL. This guaranteed high system stability, which is one of the most important factors in production environments. CentOS Stream will change this, as it will serve as the “development branch” [6] for the RHEL system. Red Hat’s website additionally describes CentOS Stream as “a preview of future Red Hat Enterprise Linux releases” [7]. This raises questions about the usability of the system in environments that require high stability.

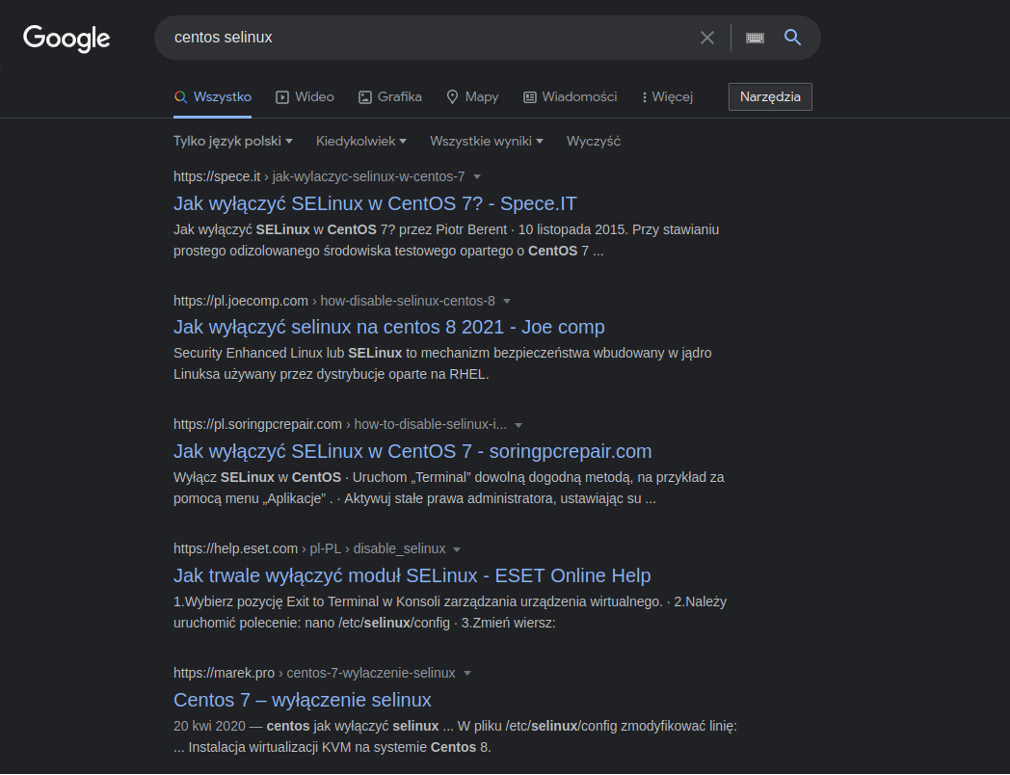

An alternative is the Rocky Linux distribution, which saw its first release in April 2021. The person responsible for creating the project is one of the co-founders of CentOS. Rocky is presented as a distribution to replace the CentOS project. For example, a quick check showed that the latest CentOS 8.4 and Rocky Linux 8.4 use an identical kernel version:

To sum up, the conclusions presented may favour the choice of a particular distribution – of course, we consider issues of trust in the system as well as the organisation itself that creates it. However, from the security point of view, the systems represent a similar level. Therefore, it is the person administering a given system that is most important for security, not the selection of a system from the pool in question.

System hardening

Now that the system selection is over, it’s time for hardening. The most thorough approach is to aim for compliance with the relevant CIS benchmark [8]. For each type of server, there is a separate benchmark that presents a set of stringent requirements to ensure a high degree of security for production systems. The requirements are categorized and, in the case of Linux systems, relate to hardening of:

- Services – primarily disabling unnecessary services

- Network – traffic filtering, network segmentation

- Logs and audits – configuration of auditd, rsyslog or similar, depending on your system

- Access control, authentication and authorisation

- Others, including SELinux configuration, proper partitioning, secure booting

Manually ensuring compliance with the CIS benchmark would be extremely time consuming and tedious. Therefore, some security scanners provide the ability to automatically check the recommendations suggested in the benchmark. Nessus or Greenbone solutions can be used for this purpose.
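To give a feel for what such an automated check does, here is a minimal Python sketch that audits the text of an `sshd_config` file. It is an illustration only: the two rules in `REQUIRED` are a hypothetical selection, the function names are invented for this example, and `Match` blocks are not handled. A real benchmark covers far more settings.

```python
# Hypothetical rule set: keyword (lower case) -> expected value.
REQUIRED = {
    "permitrootlogin": "no",
    "permitemptypasswords": "no",
}


def parse_sshd_config(text: str) -> dict:
    """Parse 'Keyword value' lines. Keywords are case-insensitive and
    the first occurrence of a keyword wins, as in OpenSSH."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split(None, 1)
        if len(parts) == 2:
            settings.setdefault(parts[0].lower(), parts[1].strip())
    return settings


def audit(text: str) -> list:
    """Return one finding per rule that is violated or not set explicitly."""
    settings = parse_sshd_config(text)
    findings = []
    for keyword, expected in REQUIRED.items():
        actual = settings.get(keyword)
        if actual is None:
            findings.append(f"{keyword}: not set explicitly (expected {expected})")
        elif actual.lower() != expected:
            findings.append(f"{keyword}: {actual} (expected {expected})")
    return findings
```

An empty list means that the configuration satisfies every rule in the sketch; each string in a non-empty list names a setting to review.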

When you don’t have the resources to provide the highest level of security, it is also possible to use tools that will only check for the most common and easy to detect security issues. For this purpose, the Lynis program can be used [9], which also focuses on system hardening. It can detect security vulnerabilities not only in the system configuration but also in the configuration of common services. An important feature is the ability to check whether the installed packages have known vulnerabilities. On the other hand, if we only want to check the possibility of privilege escalation, the tools designed for Red Teaming will be most helpful. Some of the most popular are LinEnum [10] and LinPEAS.

This is probably obvious, but it’s worth adding that just running a CIS benchmark or security audit tool won’t do anything if you don’t apply the received recommendations. We can implement recommendations manually or automate part of the process. A lot of time can be saved by using Ansible scripts which will automatically apply recommendations universal to Linux systems or defined specifically for a particular distribution. Scripts can be run on subsequent production servers.

Virtualisation

Does anything change when the operating system is virtualised? From a security perspective, virtualisation adds another layer of protection. However, keep in mind that this is no reason to neglect the security of the virtualised system. After all, we try not to run vulnerable web applications in the hope that a WAF alone will provide sufficient protection. Virtual machines have their own kernel, and the difficulty of escaping from such an environment can effectively deter a potential intruder. It is true that hypervisor-specific vulnerabilities are published, but it is unlikely that someone will use an exploit worth a significant amount of money against our environment [11][12].

Therefore, special care should be taken to keep our virtualisation software up to date. In contrast, the internal network is the most promising attack vector when there is no escape from the virtual environment. Therefore, you should pay attention to the hardening capabilities of the host network and the selection of an appropriate network adapter in the hypervisor.

Also worth mentioning is the Vagrant tool, which has become quite popular over the years. It makes it easy to define the configuration of a virtual machine so that it can be replicated. The most important thing is to verify that the virtual machine (box) is trusted. Therefore, it is suggested to use the collections of officially recommended boxes. Interestingly, Vagrant’s developers state that there are only two sets of officially recommended boxes: hashicorp and bento [13].

The disadvantage of Vagrant is that a VM started with the default settings has a low level of configuration security. Therefore, as soon as the system starts, it should be properly hardened. For example, the default configuration creates a user named vagrant whose password is also vagrant. There are more such “conveniences”, so they need to be identified and modified to increase security.

Containerisation

Most applications today use containers. Such a solution allows you to logically separate system components while maintaining native speed. There are several tools that enable containerisation, such as LXD, Podman, or the most popular one, Docker. In this section, because of its popularity, we will focus on the last one.

Container – identity theft

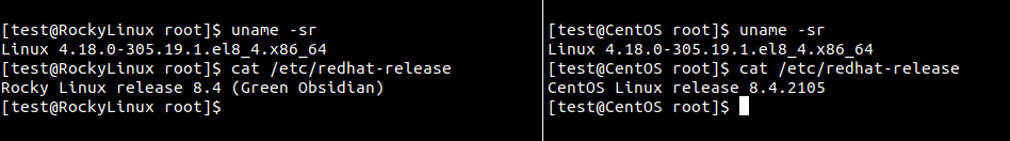

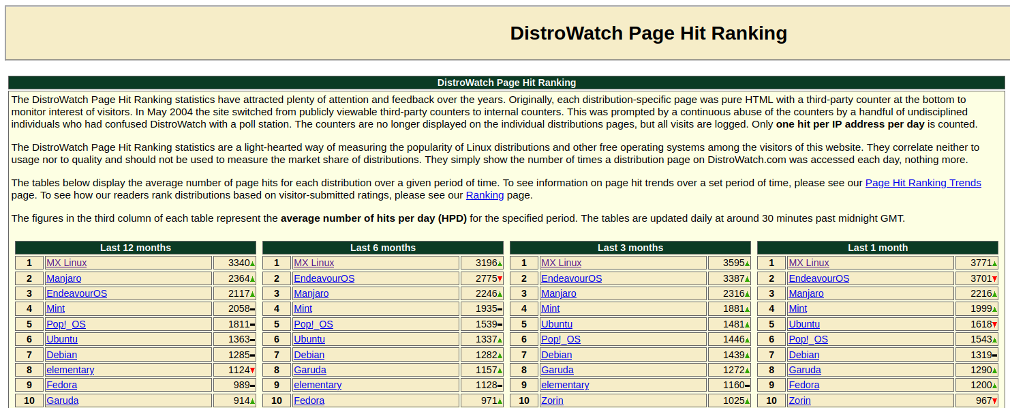

Let’s begin with the dangers of treating images as always trustworthy. In many cases, it is difficult to confirm the reliability of a given image. For a simple test, a new account named mxlinux was created on Docker Hub [14]. The logo of the MX Linux distribution and the project’s website address were used to make the profile look authentic. Deliberately, no images were published under this account, as this could be considered a malicious activity.

It should be added that, according to the DistroWatch website, MX Linux is the distribution generating the most interest [15]. Therefore, an attack using a fake Docker profile could be extremely successful.

Of course, there are many ways to steal identities on portals like this. Other profiles named “googlealphabet” and “w3af” (the name of a popular security scanner) were also created for testing purposes. Properly crafted profiles are likely to attract unsuspecting users. Once a fake account is created, the attacker can publish a malicious image to gain access to victims’ internal networks.

How to counter attacks of this type? One mechanism is Docker-verified profiles, marked with a special “Verified Publisher” tag. An additional method is to verify that links to a given Docker profile appear on the pages of the organization to which the account is supposed to belong. More detailed recommendations for trusted images can be found in the Docker documentation [16].
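One way to turn this advice into a routine check is to compare the publisher part of every image reference against an allowlist before it is pulled. The Python sketch below is a simplified illustration: the allowlist contents, the function names and the image names used in the usage note are assumptions, and the parser covers only the common `[registry/]namespace/name[:tag]` shapes. The only fixed fact it relies on is that official Docker Hub images live in the `library` namespace.

```python
# Hypothetical allowlist of (registry, namespace) pairs we decided to trust.
TRUSTED_PUBLISHERS = {("docker.io", "library")}


def split_reference(ref: str) -> tuple:
    """Return (registry, namespace) for an image reference."""
    name = ref.split("@", 1)[0]  # drop an optional digest
    parts = name.split("/")
    first = parts[0]
    # The first component is a registry host if it looks like one.
    if len(parts) > 1 and ("." in first or ":" in first or first == "localhost"):
        registry, parts = first, parts[1:]
    else:
        registry = "docker.io"
    if len(parts) > 1:
        namespace = parts[0]
    else:
        # A bare name on Docker Hub is an official image.
        namespace = "library" if registry == "docker.io" else ""
    return registry, namespace


def is_trusted(ref: str) -> bool:
    """True when the image comes from an allowlisted publisher."""
    return split_reference(ref) in TRUSTED_PUBLISHERS
```

With this allowlist, `is_trusted("debian:11")` holds, while a reference such as the hypothetical `mxlinux/example:latest` is rejected until someone has verified the publisher and added it to the list.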

An identical attack can be performed using the aforementioned Vagrant. It is an even easier target because Vagrant is less popular than Docker, so more names are still unreserved. For testing purposes, it was possible to create profiles named “MIT” or “RedHat”. From the perspective of an uninformed programmer or DevOps engineer, the names of reputable universities and international companies are likely to inspire particular trust.

How to secure Docker

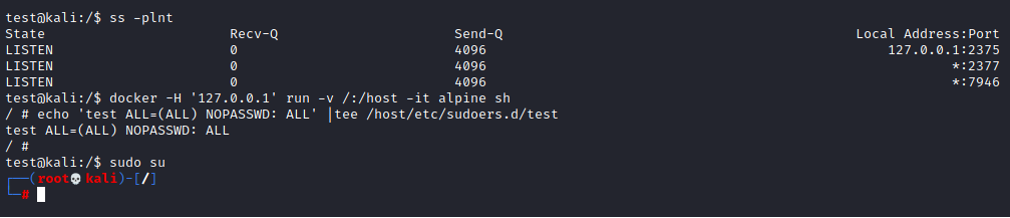

Applying good security practices to Docker is a laborious task. There are many aspects that need to be considered first. Perhaps the most well-known threat is an unsecured Docker daemon socket. Privilege escalation in this case is simple:

It is also often cited as a problem that containers are started by default as the root user. This is a Docker-specific case because LXD and Podman do not use administrator permissions inside the container by default. This is one reason why Docker is considered a less secure containerisation tool.

A separate issue is the need to update the containers and the software used by the containers. This is especially true if the container uses software that is not available from the package manager. Care should be taken that such software is manually updated.

Containers use the same kernel as the host. Therefore, resource separation is essential. To do this, Docker uses the namespaces mechanism provided by the kernel. Exploiting a vulnerability in the kernel can allow an escape from the container [17]. So be sure to keep the host system kernel up to date.

A set of recommendations ([18][20]) can be represented as a list:

- Use trusted Docker containers

- Regularly rebuild containers to eliminate known vulnerabilities

- Protect Docker daemon socket

- Use low-level accounts inside containers by default

- Use the lowest permissions possible; this applies primarily to:

  - Linux capabilities

  - network segmentation

  - limitation of available resources with the use of cgroups (protection against DoS attack)

  - restricting permissions of containers through AppArmor or seccomp policies

  - access to the host file system from the container level

- Limit the storage of secrets inside the container (especially do not store unsecured passwords and keys in the file system)

- Do not use the --privileged flag, and do not disable namespace separation with flags such as --net=host, --pid=host, etc.
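Several of these recommendations can be checked mechanically before a container is started. The sketch below reviews a list of `docker run` arguments, with the flags written in the double-hyphen form that the Docker CLI accepts. It is a simplified assumption-laden example: the function name and the wording of the findings are invented here, and only the `--flag=value` spelling of the namespace flags is recognised.

```python
# Flags from the list above, with a short reason each (wording is ours).
RISKY_FLAGS = {
    "--privileged": "gives the container extended privileges on the host",
    "--net=host": "shares the host network namespace",
    "--network=host": "shares the host network namespace",
    "--pid=host": "shares the host PID namespace",
}

DOCKER_SOCKET = "/var/run/docker.sock"


def review_run_args(args: list) -> list:
    """Return human-readable findings for a list of `docker run` arguments."""
    findings = []
    for arg in args:
        if arg in RISKY_FLAGS:
            findings.append(f"{arg}: {RISKY_FLAGS[arg]}")
        if DOCKER_SOCKET in arg:
            findings.append("the Docker daemon socket is mounted into the container")
    # Without an explicit user the image default applies, which is often root.
    has_user = any(a in ("--user", "-u") or a.startswith("--user=") for a in args)
    if not has_user:
        findings.append("no --user given; the container may run as root")
    return findings
```

A check like this fits naturally into a deployment script or CI job, where a non-empty result can block the start of the container.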

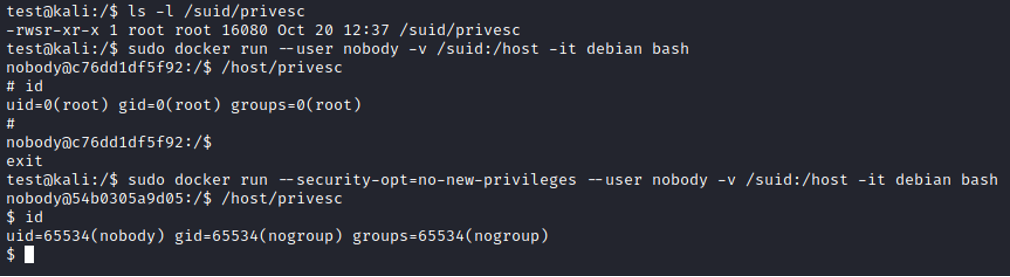

Best practices [19] also recommend adding the --security-opt=no-new-privileges flag, which prevents processes inside the container from gaining additional privileges through SUID binaries. Its effect can be seen in a simple example:

Considering the number of recommendations made, one might be tempted to say that Docker is like cookies in the world of web applications. Both solutions offer a relatively low level of security by default, but with the right configuration you can be sure of their effectiveness.

Software

External code

So, we chose the operating system and containers. We started to create our own project, but as it grew, it became necessary to use packages created by other users. At this point, too, we should stop and consider whether our habits are appropriate. We definitely should not show unlimited trust in GitHub repositories. A random hint from the Internet is that we should check every piece of code [21]. However, checking each piece of software line by line is not an optimal approach. Therefore, it makes sense to establish your own course of action.

The problem should be looked at from two sides. We should first verify whether the author had a malicious use in mind. If the project is popular, there is a high probability that someone has previously analysed the code. It is therefore worth looking for a popular substitute for a little-known package. It’s also a good idea to find the author’s other projects and see if they are recognized online. This can also increase our trust in the software. Of course, the best idea is to analyse the code we are going to use. However, depending on the project, this may not always be possible within a certain time limit. In this context, it is useful to check the known issues reported for the repository. If the repository actually contains malicious code, it is possible that someone has started a discussion about it. In this way, we hope that someone before us has verified the package in question.

By analysing known issues, it is also possible to detect security vulnerabilities in the source code. This brings us to the second problem: does the repository have known or unknown vulnerabilities? Addressing this problem is even more difficult. The solution is to monitor the security of the packages we depend on just as closely as that of our own, proprietary code. Of course, it is easier if the repository belongs to a well-known organization that pays attention to security patches. Here we come once again to the topic of regular updates. Using components with known vulnerabilities was ranked a high sixth place in the OWASP Top 10:2021 [22]. This is a “promotion” of three places relative to the OWASP Top 10:2017.

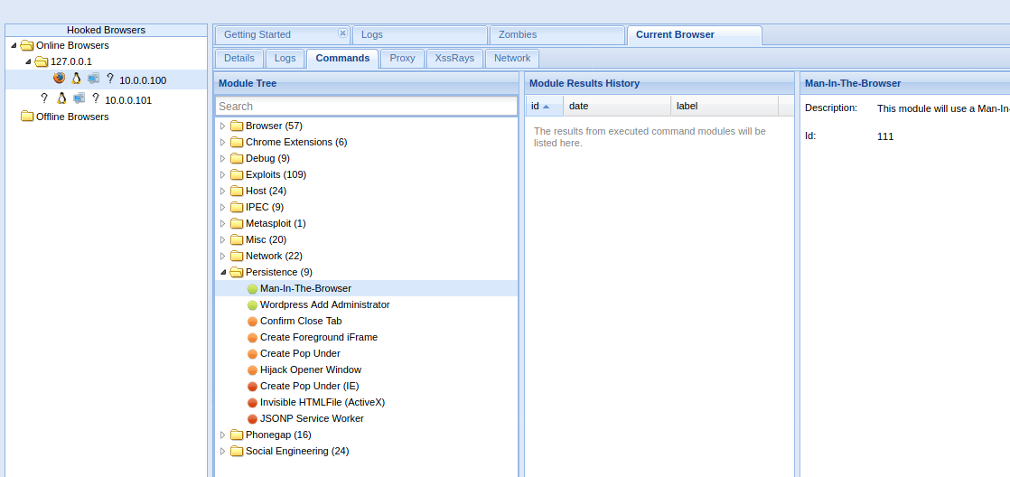

Developers are generally aware that they shouldn’t use untrusted server-side components. However, it is interesting to see how common it is for web application frontends to use JavaScript from external, often untrusted servers. In this case, malware monitoring is much more challenging. After all, we have no assurance that the JavaScript code will not suddenly be modified. Injecting custom JavaScript code in the context of a user’s session will enable session takeover and may even lead to a successful attack on the victim’s infrastructure. To carry out attacks of this type, there are tools [31] that allow managing the browsers of multiple victims and offer ready-made payloads. One of them is the option to swap links on a page in such a way that an attacker gets a persistent session. When combined with a keylogger, the results can be quite impressive.

Injecting external JavaScript code into our site is de facto making our application’s security dependent on the security of the other servers that host those scripts. Running external JavaScript code also raises privacy concerns. Therefore, it is good practice to serve JavaScript only from a server that is under our control. From an informed user’s perspective, blocking JavaScript by default with a browser add-on is a good move.
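A simple way to find out how much external JavaScript a page pulls in is to list every script tag whose source points at a host other than our own. The Python sketch below does this with the standard library only. The class and function names, as well as the host names in the usage note, are assumptions made for the example.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class ScriptSourceCollector(HTMLParser):
    """Collect the src attribute of every <script> tag."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def external_scripts(html: str, own_host: str) -> list:
    """Return script URLs served from a host other than own_host."""
    collector = ScriptSourceCollector()
    collector.feed(html)
    external = []
    for src in collector.sources:
        host = urlparse(src).hostname  # None for relative URLs
        if host is not None and host != own_host:
            external.append(src)
    return external
```

Run against a page of the hypothetical site `www.example.org`, the function returns only the scripts loaded from other hosts, which is the list to review or to move under our own control.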

Package replacement

The cases considered so far have involved a situation where we have analysed whether the software is trustworthy. In contrast, in this subsection, we will consider the situation where an attacker has planted malware. One of the more common attacks of this type is called typosquatting. The idea is simple: install malware on the computer of a victim who misspells a package name. A good example is the “cross-env” package available from the npm package manager. When typing the name of this package from memory, it is easy to get confused and miss the hyphen. An attacker foresaw that this situation may take place and published their own package called “crossenv” [23]. Workstations that downloaded this package were infected. Another example would be a popular Python library called “urllib3”. If we get the name wrong and type “urlib3” or “urllib” instead, we are again the target of an attack [24]. At least, that was the case a few years ago, as the listed anomalies were identified and withdrawn from the repositories. Of course, we can still expect that similar cases exist but have not yet been detected.
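A basic defence against this kind of mistake is to warn whenever a requested name is almost, but not exactly, the name of a package we already know. The Python sketch below uses the classic Levenshtein edit distance. The list of known names and the threshold of one edit are assumptions chosen to match the examples above; a real check would use the project's actual dependency list.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed with a rolling row."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


# Names taken from the examples in the text; extend as needed.
KNOWN_PACKAGES = ["cross-env", "urllib3"]


def lookalikes(requested: str, known=KNOWN_PACKAGES, max_distance: int = 1) -> list:
    """Return known names that the requested name is suspiciously close to."""
    if requested in known:
        return []  # exact match, nothing to warn about
    return [name for name in known
            if edit_distance(requested.lower(), name.lower()) <= max_distance]
```

A non-empty result for a name such as “crossenv” is a signal to stop and double-check the spelling before installing anything.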

A similar, incredibly interesting attack vector is a relatively new type of threat called “dependency confusion”. The targets of the attack are organizations that have their own internal package repositories. Private repositories may contain packages that are not publicly available. But what happens if an attacker publishes a malicious package with the same name as a package available only from our internal repository? In that case, a few things can go wrong. First of all, during installation we may forget to include our repository URL and thus download the malicious package from the public repository by default. There are various similar situations, but in general the problem lies in incorrect environment configuration. Next, some package managers, given a choice between a higher version of the package in the public repository and a lower version in our repository, may choose the former. This will lead to the compromise of our system. More detailed descriptions of “dependency confusion” can be found at [25]. Information on the impact of exploiting this weakness is available at [26].
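The version-preference problem can be illustrated with a toy resolver. The Python sketch below is not the algorithm of any particular package manager: the index contents, the package name `acme-billing` and all function names are hypothetical, and versions are assumed to be plain dotted numbers. It shows why "take the highest version from any index" is dangerous, and how to list internal names that already exist publicly.

```python
def parse_version(text: str) -> tuple:
    """Turn '1.2.0' into (1, 2, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def naive_resolve(name: str, indexes: dict):
    """Pick the highest version of `name` across all indexes.
    Returns (version_tuple, index_name) or None if nothing is found."""
    candidates = [
        (parse_version(version), index_name)
        for index_name, packages in indexes.items()
        for version in packages.get(name, [])
    ]
    return max(candidates) if candidates else None


def shadowed_names(internal: dict, public: dict) -> list:
    """Internal package names that also exist on the public index."""
    return sorted(set(internal) & set(public))


# Hypothetical data: an attacker published version 99.0.0 publicly.
internal_index = {"acme-billing": ["1.2.0"]}
public_index = {"acme-billing": ["99.0.0"], "requests": ["2.26.0"]}
```

Here `naive_resolve("acme-billing", {"internal": internal_index, "public": public_index})` selects the public copy because its version is higher, while `shadowed_names(internal_index, public_index)` reports the name as one that needs attention.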

A contribution worthy of the article

It is often the case that the software you want to use is not available from the package manager. In this case we need to make a decision. Should we look for a package that is ready to launch, or compile the source code ourselves, which will take some time? In this case, the answer is simple. If we have any doubts about the origin of the package, it is definitely worth taking the time to compile our own. This way we will be sure that no malicious code was added to the prebuilt package. However, there is still a possibility that a backdoor was intentionally placed in the source code.

Open source software typically has multiple contributors. They may come from different organisations and have various intentions. As a matter of interest, consider what happens if one of the contributors turns out to be malicious. Such a study was conducted by a US university. The target of the crafted attack was the Linux kernel (!). Due to the unethical nature of the study, I will not say which university it was. I will also not provide any links to the article published based on the study. I will however mention that the university in question has been excluded as a contributor to the kernel.

The context of the event was that this university wanted to see how far malicious commits would be able to go. The attack was carried out by several PhD students. Their code introduced intentional vulnerabilities to the Linux kernel. According to the mailing lists, the crafted patches reached branches marked as “stable” [27][28]. The unethical nature of the study was that the developers were not informed that they were participating in it. The incident described can be compared to Red Team operations in which the customer is not informed that they are being attacked. Such actions cannot be condoned, so it is not surprising that the university was excluded as a contributor. Nevertheless, it is important to keep in mind that a similar attack vector can be exploited in an undetected way in publicly available software.

Summary

This article addressed the issues of ensuring the security of the various components of a system whose purpose is to serve as a production environment for our application. We proposed implementing the best security practices formulated by industry authorities. In addition, we explored ways to apply the principle of limited trust.

We should always remember to verify the integrity of the software that we have downloaded ourselves. Strong cryptographic hash functions, such as SHA-512, should be used for this purpose. This applies to any type of software: drivers, operating system, packages, etc. CentOS is a good example of this, as by default it can be downloaded over HTTP [30]. None of the Polish mirrors force encryption by default. Therefore, modification of network traffic in a man-in-the-middle attack can easily result in complete compromise of our system.
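A check of this kind is easy to script. The Python sketch below computes the SHA-512 digest of a downloaded file and compares it with the entry in a checksum list. It assumes the list uses the `<hex digest>  <file name>` layout produced by `sha512sum`, which may differ from what a given project publishes, and the function names are invented for this example. The checksum list itself must of course come from a trusted channel, otherwise an attacker can replace both the file and its digest.

```python
import hashlib
import hmac
from pathlib import Path
from typing import Optional


def sha512_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-512 digest of a file, read in chunks."""
    digest = hashlib.sha512()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def expected_digest(checksum_text: str, filename: str) -> Optional[str]:
    """Find the digest listed for `filename` ('<hex>  <name>' per line)."""
    for line in checksum_text.splitlines():
        parts = line.split(None, 1)
        if len(parts) == 2 and parts[1].lstrip("*").strip() == filename:
            return parts[0].lower()
    return None


def verify_download(path: Path, checksum_text: str) -> bool:
    """True only if the file is listed and its digest matches."""
    expected = expected_digest(checksum_text, path.name)
    if expected is None:
        return False
    return hmac.compare_digest(sha512_of(path), expected)
```

A file that is missing from the list is treated the same way as a mismatch, so an incomplete checksum list cannot silently pass the check.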

Taking into consideration all of the above, we can conclude that we have built quite a safe system. Therefore, it could be unfortunate if all our efforts were wasted by sending open source code through the net. In a possible follow-up article, we will address the human factor and secure secret management. In the meantime, you can read about the best practices for handling passwords [29].

Links

[1] https://wiki.centos.org/FAQ/General

[2] https://debian.pkgs.org/10/debian-main-amd64/sudo_1.8.27-1+deb10u3_amd64.deb.html

[3] https://centos.pkgs.org/7/centos-updates-x86_64/sudo-1.8.23-10.el7_9.1.x86_64.rpm.html

[4] https://ubuntu.pkgs.org/20.04/ubuntu-updates-main-arm64/sudo_1.8.31-1ubuntu1.2_arm64.deb.html

[5] https://www.gnu.org/philosophy/ubuntu-spyware.en.html

[6] https://www.centos.org/cl-vs-cs/

[7] https://www.redhat.com/en/topics/linux/what-is-centos-stream

[8] https://www.cisecurity.org/cis-benchmarks/

[9] https://github.com/CISOfy/lynis

[10] https://github.com/rebootuser/LinEnum

[11] https://twitter.com/cbekrar/status/710428332848914432

[12] https://www.eweek.com/security/pwn2own-researchers-reveal-oracle-vmware-apple-zero-day-exploits/

[13] https://www.vagrantup.com/docs/boxes

[14] https://hub.docker.com/u/mxlinux

[15] https://web.archive.org/web/20211015194545/https://distrowatch.com/dwres.php?resource=popularity

[16] https://docs.docker.com/engine/security/trust/

[17] https://www.youtube.com/watch?v=BwUfHJXgYg0

[18] https://docs.docker.com/engine/security/

[19] https://cheatsheetseries.owasp.org/cheatsheets/Docker_Security_Cheat_Sheet.html

[20] https://snyk.io/blog/10-docker-image-security-best-practices/

[21] https://www.quora.com/Can-you-trust-everything-on-GitHub-not-to-be-malicious

[22] https://web.archive.org/web/20211001224020/https://owasp.org/Top10/

[23] https://snyk.io/blog/typosquatting-attacks/

[24] https://snyk.io/vuln/SNYK-PYTHON-URLLIB-40671

[25] https://snyk.io/blog/detect-prevent-dependency-confusion-attacks-npm-supply-chain-security/

[26] https://medium.com/@alex.birsan/dependency-confusion-4a5d60fec610

[27] https://lore.kernel.org/linux-nfs/CADVatmNgU7t-Co84tSS6VW=3NcPu=17qyVyEEtVMVR_g51Ma6Q@mail.gmail.com/

[28] https://lore.kernel.org/linux-nfs/YIAta3cRl8mk%2FRkH@unreal/

[29] https://krebsonsecurity.com/password-dos-and-donts/

[30] http://isoredirect.centos.org/centos/8/isos/x86_64/

[31] https://github.com/beefproject/beef